This blog was originally conceived as a personal reference. Later I added notes and my publications.

Saturday, January 30, 2021

Portable Audio Voice Recorder MP3 Player

Display: 128x64 screen

Built-in mic: omni-directional microphone

Weight: 34g

Size L*W*H: 8.5*3.7*1.3 cm / 3.35*1.4*0.51 in

External input: 3.5mm stereo earphone socket

USB cable: PC interface USB 1.1/USB 2.0

Battery: 3.7V/300mAh polymer battery

Max output: 300 mW

S/N ratio: 76 dB

External speaker: 20mm, 16 Ohm

Available in Slinya Company Store

The microphone holes are purely decorative. The voice recorder records in mono. The recorded sound is dull, and there is background noise while recording. The recorder also often loses its date and time settings on its own.

Portable audio voice recorder and MP3 player

Thursday, January 28, 2021

Image "Cloaking" for Personal Privacy

Emily Wenger†, PhD Student

Jiayun Zhang, Visiting Student

Huiying Li, PhD Student

Haitao Zheng, Professor

Ben Y. Zhao, Professor

† Project co-leaders and co-first authors

NEWS

4-23: v1.0 release for Windows/MacOS apps and Win/Mac/Linux binaries!

4-22: Fawkes hits 500,000 downloads!

1-28: Adversarial training against Fawkes detected in Microsoft Azure (see below)

1-12: Fawkes hits 335,000 downloads!

8-23: Email us to join the Fawkes-announce mailing list for updates/news on Fawkes

8-13: Fawkes paper presented at USENIX Security 2020

News: Jan 28, 2021. It has recently come to our attention that there was a significant change made to the Microsoft Azure facial recognition platform in their backend model. Along with general improvements, our experiments seem to indicate that Azure has been trained to lower the efficacy of the specific version of Fawkes that has been released in the wild. We are unclear as to why this was done (since Microsoft, to the best of our knowledge, does not build unauthorized models from public facial images), nor have we received any communication from Microsoft on this. However, we feel it is important for our users to know of this development. We have made a major update (v1.0) to the tool to circumvent this change (and others like it). Please download the newest version of Fawkes below.

2020 is a watershed year for machine learning. It has seen the true arrival of commoditized machine learning, where deep learning models and algorithms are readily available to Internet users. GPUs are cheaper and more readily available than ever, and new training methods like transfer learning have made it possible to train powerful deep learning models using smaller sets of data.

...

Publication & Presentation

Fawkes: Protecting Personal Privacy against Unauthorized Deep Learning Models. Shawn Shan, Emily Wenger, Jiayun Zhang, Huiying Li, Haitao Zheng, and Ben Y. Zhao.

In Proceedings of USENIX Security Symposium 2020. (Download PDF here)

Downloads and Source Code - v1.0 Release!

NEW! Fawkes v1.0 is a major update. We made the following changes to significantly improve protection and software reliability:

- We updated the backend feature extractor to state-of-the-art ArcFace models.

- We injected additional randomness into the cloak generation process through randomized model selection.

- We migrated the code base from TF 1 to TF 2, which resulted in a significant speedup and better compatibility.

- Other minor tweaks to improve protection and minimize image perturbations.

Download the Fawkes Software:

(new) Fawkes.dmg for Mac (v1.0)

DMG file with installer app

Compatibility: MacOS 10.13, 10.14, 10.15, 11.0 (new)

Fawkes.exe for Windows (v1.0)

EXE file

Compatibility: Windows 10

Setup Instructions: For MacOS, download the .dmg file and double-click to install. If your Mac refuses to open the app because it is from an unidentified developer, go to System Preferences > Security & Privacy > General and click Open Anyway.

Download the Fawkes Executable Binary:

The Fawkes binary offers additional options for selecting different parameters. Check here for more information on how to select the best parameters for your use case.

Download Mac Binary (v1.0)

Download Windows Binary (v1.0)

Download Linux Binary (v1.0)

To use the binary, simply run "./protection -d imgs/", where imgs/ is the folder containing the photos you want to protect.

Fawkes Source Code on GitHub, for development.

...

Frequently Asked Questions

- How effective is Fawkes against third-party facial recognition models like Clearview.ai?

We have extensive experiments and results in the technical paper (linked above). The short version is that we provide strong protection against unauthorized models: in our tests against state-of-the-art facial recognition models from Microsoft Azure, Amazon Rekognition, and Face++, protection rates are at or near 100%. The level of protection will vary depending on your willingness to tolerate small tweaks to your photos. Please remember that this is a research effort first and foremost. While we are trying hard to produce something useful for privacy-aware Internet users at large, there are likely issues with configuration and with the usability of the tool itself, and it may not work against all models for all images.

- How could this possibly work against DNNs? Aren't they supposed to be smart?

This is a popular reaction to Fawkes, and quite reasonable. We often hear in the popular press how amazingly powerful DNNs are and the impressive things they can do with large datasets, often detecting patterns where humans cannot. Yet the Achilles' heel of DNNs has been a phenomenon called adversarial examples: small tweaks to inputs that can produce massive differences in how DNNs classify them. Adversarial examples have been recognized since 2014 (here's one of the first papers on the topic), and numerous defenses have been proposed in the years since (some of them from our lab). It turns out they are extremely difficult to remove, and in a way they are a fundamental consequence of the imperfect training of DNNs. Multiple PhD dissertations have already been written on the subject, but suffice it to say that this is a fundamentally difficult property to eliminate, and many in the research community now accept it as a necessary evil for DNNs.
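The intuition that many individually tiny input changes can add up to a large change in a model's output can be sketched with a toy linear scorer. This is a minimal illustration under stated assumptions, not Fawkes's cloaking algorithm: a linear model stands in for a DNN, and the 100x100 "image", the alternating weights, and the helper names are all hypothetical. Each pixel moves by at most 0.002 on a 0-1 scale, yet because every shift is aligned against its weight, the score drops by eps times the sum of the absolute weights (0.002 × 10,000 = 20), so the label flips from about +10 to about -10.

```python
# Toy illustration of why tiny, spread-out input changes can flip a model's decision.
# Assumption: a plain linear scorer stands in for a DNN; this is NOT Fawkes's cloaking algorithm.


def sign(v):
    """Return +1, -1, or 0 according to the sign of v."""
    return (v > 0) - (v < 0)


def linear_score(w, x):
    """Toy linear classifier: a positive score means label A, a negative score label B."""
    return sum(wi * xi for wi, xi in zip(w, x))


def perturb(w, x, eps):
    """Move every input value by exactly eps against the sign of its weight.

    For a linear model the gradient of the score with respect to x is w itself,
    so this is the fast-gradient-sign step; it lowers the score by eps * sum(|w|).
    """
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]


n = 100 * 100  # hypothetical input: a 100x100 grayscale image with pixel values in [0, 1]
w = [1.0 if i % 2 == 0 else -1.0 for i in range(n)]  # hypothetical weights
x = [0.5 + 0.001 * sign(wi) for wi in w]  # an image labeled A by a small margin
eps = 0.002  # about half of one 8-bit intensity step (1/255 is roughly 0.0039)

x_adv = perturb(w, x, eps)
before = linear_score(w, x)
after = linear_score(w, x_adv)
largest_change = max(abs(a - b) for a, b in zip(x, x_adv))

print(f"score before: {before:.2f}")
print(f"score after:  {after:.2f}")
print(f"largest per-pixel change: {largest_change:.4f}")
```

In this toy model the score shift grows linearly with the number of pixels, which is why a change far too small to see on any single pixel can still move the output a long way.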

The underlying techniques used by Fawkes draw directly from the same properties that give rise to adversarial examples. Is it possible that DNNs will evolve significantly to eliminate this property? It's certainly possible, but we expect that will require a significant change in how DNNs are architected and built. Until then, Fawkes works precisely because of fundamental weaknesses in how DNNs are designed today.

- Can't you just apply some filter, compression, or blurring algorithm, or add some noise to the image, to destroy image cloaks?

As counterintuitive as this may be, the high-level answer is that no simple tool destroys the perturbations that form image cloaks. To make sense of this, it helps to first understand that Fawkes does not use high-intensity pixels or rely on bright patterns to distort the classification value of the image in the feature space. Instead, the distortion in the feature space comes from a precisely computed combination of many pixel changes that do not easily stand out. If you're interested in the details, we encourage you to take a look at the technical paper (also linked above). In it we present detailed experimental results showing how robust Fawkes is to things like image compression and distortion/noise injection. The quick takeaway is that as you increase the magnitude of these noisy disruptions to the image, the protection provided by image cloaking does fall, but more slowly than normal image classification accuracy. Translated: yes, it is possible to add noise and distortions at a high enough level to break image cloaks. But such distortions hurt normal classification far more, and faster. By the time a distortion is large enough to break cloaking, it has already broken normal image classification and made the image useless for facial recognition.

- How is Fawkes different from things like the invisibility cloak projects at the University of Maryland, led by Tom Goldstein, and other similar efforts?

Fawkes works quite differently from these prior efforts, and we believe it is the first practical tool that the average Internet user can make use of. Prior projects like the invisibility cloak project involve users wearing a specially printed, patterned sweater, which then prevents the wearer from being recognized by person-detection models. In other cases, the user is asked to wear a printed placard or a special patterned hat. One fundamental difference is that these approaches can only protect a user while the user is wearing the sweater/hat/placard. Even if users were comfortable wearing these unusual objects in their daily lives, these mechanisms are model-specific; that is, they are specially encoded to prevent detection by a single specific model (in most cases, the YOLO model). Someone trying to track you can either use a different model (there are many), or just target you in settings where you can't wear these conspicuous accessories. In contrast, Fawkes protects users by targeting the model itself. Once you disrupt the model that's trying to track you, the protection is always on no matter where you go or what you wear, and it even extends to attempts to identify you from static photos of you that are taken, shared, or sent digitally.

- How can Fawkes be useful when there are so many uncloaked, original images of me on social media that I can't take down?

Fawkes works by training the unauthorized model to learn about a cluster of your cloaked images in its "feature space." If you, like many of us, already have a significant set of public images online, then a company like Clearview.ai has likely already downloaded those images and used them to learn "what you look like" as a cluster in its feature space. However, these models are always adding more training data in order to improve their accuracy and keep up with changes in your looks over time. The more cloaked images you "release," the larger the cluster of "cloaked features" the model will learn. At some point, when your cluster of cloaked images grows bigger than the cluster of uncloaked images, the tracker's model will switch its definition of you to the new cloaked cluster and abandon the original images as outliers.

- Is Fawkes specifically designed as a response to Clearview.ai?

It might surprise some to learn that we started the Fawkes project a while before the New York Times article that profiled Clearview.ai in February 2020. Our original goal was to serve as a preventative measure for Internet users to inoculate themselves against the possibility of some third-party, unauthorized model. Imagine our surprise when we learned, three months into our project, that such companies already existed and had already built up a powerful model trained on massive troves of online photos. It is our belief that Clearview.ai is likely only the (rather large) tip of the iceberg. Fawkes is designed to significantly raise the costs of building and maintaining accurate models for large-scale facial recognition. If we can reduce the accuracy of these models enough to make them untrustworthy, or force the models' owners to pay significant per-person costs to maintain accuracy, then we would have largely succeeded. For example, someone carefully examining a large set of photos of a single user might be able to detect that some of them are cloaked. However, that same person is quite possibly capable of identifying the target person in equal or less time using traditional means (without the facial recognition model).

- Can Fawkes be used to impersonate someone else?

The goal of Fawkes is to avoid identification by someone with access to an unauthorized facial recognition model. While it is possible for Fawkes to make you "look" like someone else (e.g. "person X") in the eyes of a recognition model, we would not consider it an impersonation attack, since "person X" is highly likely to want to avoid identification by the model themselves. If you cloaked an image of yourself before giving it as training data to a legitimate model, the model trainer can simply detect the cloak by asking you for a real-time image and testing it against your cloaked images in the feature space. The key to detecting cloaking is the "ground truth" image of you that a legitimate model can obtain and unauthorized models cannot.

- How can I distinguish photos that have been cloaked from those that have not?

A big part of the goal of Fawkes is to make cloaking as subtle and undetectable as possible and to minimize the impact on your photos. Thus it is intentionally difficult to tell cloaked images from the originals. We are looking into adding small markers to the cloak as a way to help users identify cloaked photos. More information to come.

- How do I get Fawkes and use it to protect my photos?

We are working hard to produce user-friendly versions of Fawkes for the Mac and Windows platforms. We have some initial binaries for the major platforms (see above). Fawkes is also available as source code, and you can compile it on your own computer. Feel free to report bugs and issues on GitHub, but please bear with us if you have issues with the usability of these binaries. Note that we do not have any plans to release Fawkes mobile apps, because Fawkes requires significant computational power that would be challenging even for the most powerful mobile devices.

Full text: Image "Cloaking" for Personal Privacy

Wednesday, January 27, 2021

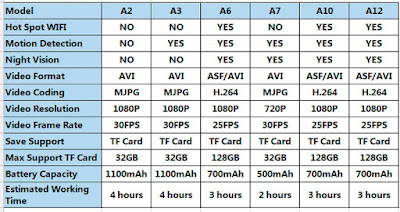

Vandlion A3 Mini Camcorder

The Video as provides basic and advanced practices activists can use to increase the likelihood that their footage can serve as evidence in criminal and civil justice processes, for advocacy, and by the media. It also aims to help activists and lawyers better collaborate on capturing and collecting valuable video evidence.

This camera supports only high-speed microSD cards of Class 10 or above. It is a law enforcement recording device with a flashlight.

- Model Number: A3

- Recording Format: WAV, 256 kbps

- Video Format: AVI, 1920x1080 (1080P)

- Frame Rate: 30 fps

- Video Codec: MJPEG; Aspect Ratio: 4:3

- Audio Sampling Rate: 48 kHz

- Photo Size: 1920x1080

- Size: 90*25*11mm

- USB Connector: USB 2.0 High Speed

- Supported TF (microSD) Memory Card: 4GB to 128GB

- Battery Capacity: 3.7V/1100mAh lithium battery

- Battery Recording Time: About 3-4 hours

- Weight: 78g

- 8GB TF Card Capacity: about 280 minutes of recording; about 80 minutes of filming

- Working Temperature: -5 to 40 °C

- Compatible System: Windows 2000 & above

2020-07-07 23:59:59 motion:1 Y0Y

Y - show time;

0 - video resolution: 0 - 1080P, 1 - 720P, 2 - 480P;

Y - loop video.
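The settings line above packs the date, the time, a motion value, and three single-character flags into one string. The helpers below are a minimal sketch for building and checking such a line, assuming that "N" switches a flag off (only "Y" appears in the example) and treating motion:1 as an opaque value, since its meaning is not described here. The function names are hypothetical, and writing the line to the memory card is left out because the expected file name and location are not stated.

```python
from datetime import datetime

# Resolution codes as listed for the A3 settings line.
RESOLUTION_CODES = {"0": "1080P", "1": "720P", "2": "480P"}


def build_settings_line(when, show_time=True, resolution="1080P", loop=True, motion=1):
    """Assemble a line in the 'date time motion:N XYZ' format shown above.

    Assumptions: 'N' turns a flag off (only 'Y' appears in the example), and
    motion is passed through unchanged because its meaning is not documented here.
    """
    code = {label: digit for digit, label in RESOLUTION_CODES.items()}[resolution]
    flags = ("Y" if show_time else "N") + code + ("Y" if loop else "N")
    return f"{when:%Y-%m-%d %H:%M:%S} motion:{motion} {flags}"


def parse_settings_line(line):
    """Split a settings line back into its fields; raises ValueError if malformed."""
    date_part, time_part, motion_part, flags = line.split()
    when = datetime.strptime(f"{date_part} {time_part}", "%Y-%m-%d %H:%M:%S")
    key, _, value = motion_part.partition(":")
    if key != "motion" or len(flags) != 3 or flags[1] not in RESOLUTION_CODES:
        raise ValueError(f"unrecognized settings line: {line!r}")
    return {
        "when": when,
        "motion": int(value),
        "show_time": flags[0] == "Y",
        "resolution": RESOLUTION_CODES[flags[1]],
        "loop": flags[2] == "Y",
    }


line = build_settings_line(datetime(2020, 7, 7, 23, 59, 59))
print(line)  # 2020-07-07 23:59:59 motion:1 Y0Y
print(parse_settings_line(line)["resolution"])  # 1080P
```

Parsing the line back is a cheap way to catch a typo (a missing field or an unknown resolution digit) before copying the settings to the card.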

Vandlion A3 Mini Camcorder freeze frame

Nice camera with good recording quality.

Available in store

Vandlion A3 mini camcorder

Monday, January 25, 2021

Waterproof Dry Storage Bionic Camo 75L Bag

Buckle Material: UTX-DURAFLEX

Waterproof index: greater than or equal to 5000

Color: maple leaf tree

Capacity: 75L

Unfolded Size: 34*90 cm

Weight: 115g

You can store food, clothes, a wallet, and other personal belongings in it. The bag can be compressed, which makes it convenient to carry.

Waterproof Dry Storage Bionic Camo 75L Bag on 5.11 Tactical TDU Multicam Shirt for pattern comparison

Available in store, store

Waterproof 75L storage bag in a bionic camouflage pattern

Saturday, January 16, 2021

U-Disk SK Digital Audio Voice Recorder

Recording Format: WAV

Recording bit rate: 160 kbps

Voice Activated Recording: No

Battery: Built-in rechargeable 100mAh Li-ion

PC interface: USB 2.0

Battery time: about 13 hours

Output: playback on a computer

System support: Windows XP, Windows 7, Windows 8 or later

Color: Black

Material: ABS

Product weight: 12g

Size: 70x22x10mm

Available in store, store, shops

U-Disk SK digital voice recorder